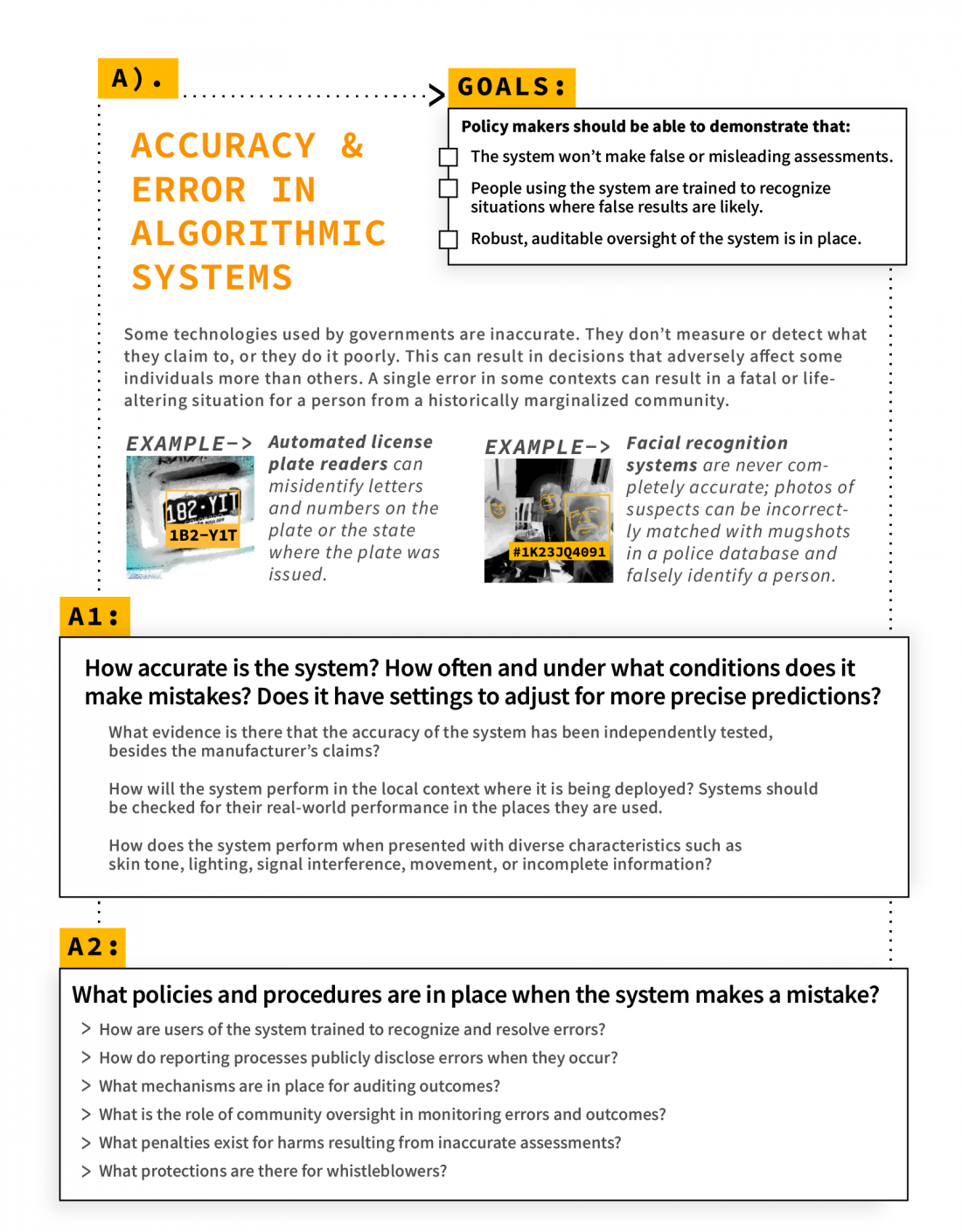

Automated decision systems make mistakes, and the kinds of mistakes they make can put people with marginalized identities at increased risk. These technologies are not always necessary. Some systems may be too invasive or too risky by design, which alone is reason enough to reject them outright. To learn more about a specific automated decision system, ask government employees, elected officials, and vendors these questions.

For additional resources, see our list of frequently asked questions.

The AEKit was created in collaboration with the Critical Platform Studies Group, the Tech Fairness Coalition, and the ACLU of Washington. It began as a project at the Data Science for Social Good Summer Program, run by the eScience Institute at the University of Washington. For a full list of our contributors, click here.

Graphic design, web design, and content strategy by micah epstein.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.