Published: Wednesday, February 16, 2022

Every day, corporations and governments use algorithms, or complex and often non-transparent formulas, to make decisions about our lives. These decision-making formulas, also called automated decision systems, are difficult to examine for flaws and biases because they are hidden from review by trade secrets and other barriers. Yet, they have clear impacts on the lives of everyday people, especially on the lives of the most marginalized and vulnerable community members—low-income folks, Black people, Indigenous people, people of color, and people with disabilities.

Even more troubling, those whose lives are affected by automated decision systems lack meaningful ways to challenge inaccuracies and biases or even understand how the systems work. Below, we will examine how non-transparent and unaccountable algorithms can undermine access to one of the most basic human needs: housing.

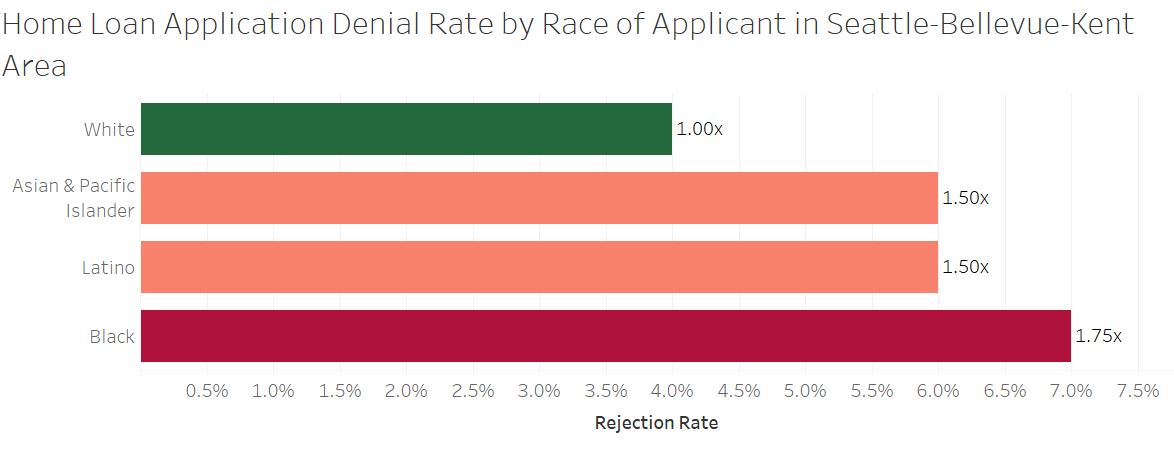

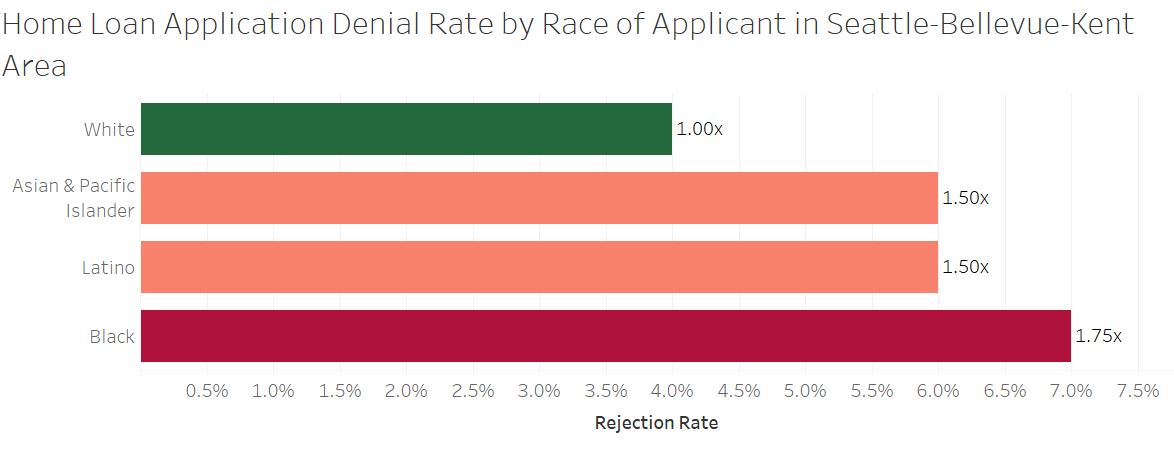

Algorithms and Home Ownership

In 2019, a North Carolina couple found their dream home, but just two days before they were scheduled to sign their mortgage documents, they were denied the loan. They had saved more than enough for the down payment, had good credit scores, and earned around six figures each. Despite these factors, they were told they didn’t qualify because one of them was a contractor and not a full-time employee. Their colleagues, however, were also contractors, and yet they had mortgages. The key difference? The co-workers are white. And this couple is Black.

This is just one of many stories where people of color are disproportionately denied access to homeownership by lenders, and often, algorithms are to blame. An investigation by the Markup found that in 2019, lenders were more likely to deny home loans to people of color than to white people who looked identical on paper, except for their race. In fact, lenders rejected high-earning Black applicants with less debt at higher rates than high-earning white applicants with more debt.

In the Seattle-Bellevue-Kent area, Black, Asian and Pacific Islander, and Latino applicants are denied at significantly higher rates than white applicants with similar financial qualifications. According to data obtained from the Markup, a Black applicant in this region is 75% more likely to be denied than a similarly situated white applicant. Latino and Asian and Pacific Islander applicants are 50% more likely to be denied than a similarly situated white applicant.
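To make a figure like "75% more likely to be denied" concrete, here is a minimal sketch of how such a relative increase can be computed from two denial rates. The rates below are made-up illustrative numbers, not the Markup's data, and the function name is our own. The Markup's analysis compared applicants with similar financial qualifications, which requires a statistical model rather than the raw ratio shown here.

```python
def relative_denial_increase(group_rate: float, reference_rate: float) -> float:
    """Return how much more likely one group is to be denied than a reference group.

    A return value of 0.75 means "75% more likely to be denied".
    """
    if reference_rate <= 0:
        raise ValueError("reference_rate must be positive")
    return group_rate / reference_rate - 1.0


# Hypothetical denial rates, chosen only to illustrate the arithmetic.
reference_rate = 0.08  # assumed: 8 of every 100 reference-group applicants denied
group_rate = 0.14      # assumed: 14 of every 100 comparison-group applicants denied

increase = relative_denial_increase(group_rate, reference_rate)
print(f"{increase:.0%} more likely to be denied")
```

With these assumed rates, a gap of six percentage points in denial rates corresponds to a 75% higher likelihood of denial, which is why relative figures can look much larger than the raw difference between rates.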

The racial disparities in home loan application denials are made all the more troubling because home ownership is a key way to build generational wealth, and one that Black people have historically been denied. Barriers to home ownership, both past and present, are an important reason for the persistent wealth gap between Black families and white families. Thus, discriminatory decisions about home loan applications have profound ramifications that reverberate beyond the denial of the loan itself.

Many loan application decisions are based on algorithms that are completely hidden, so potential borrowers are unaware of why their applications are rejected. Mysterious automated underwriting software programs, as well as a credit-scoring algorithm called FICO, create decisions that affect us every day.

The little that we do know about FICO scoring and underwriting algorithms is concerning. Redlining and racial covenants have historically excluded people of color from home ownership, yet FICO awards credit only for a history of mortgage payments and not for rental history. These systems therefore perpetuate a legacy of discrimination and systemic racism in housing into the present day.

Although potential borrowers have some ability to contest individual errors used in both credit scoring algorithms and loan underwriting algorithms, they have no ability to challenge the way in which data are weighed or the validity of the conclusions drawn, because the credit scoring and underwriting algorithms that make decisions about people’s loans are secret. According to the Markup, not even FICO’s regulator, the Federal Housing Finance Agency, knows exactly how these algorithms score applicants.

The North Carolina couple pushed back against the algorithm after they were initially denied a home loan for reasons that apparently did not apply to white applicants. Their rejection was eventually reversed but only with the help of their real estate agent and after they had their employer send multiple emails to the lender to verify employment. It remains unclear why they were rejected in the first place, and many others may not be so successful in fighting against a discriminatory algorithm.

Algorithms and Rental Housing

Landlords’ use of computer-generated “tenant screening reports” to grant or deny tenancy is just as opaque and unfair as banks’ mortgage decisions. Tenant screening reports are created by an unregulated industry of more than 2,000 companies. Their reports often include FICO scores, with all the flaws discussed above, but the problems only start there.

As with home ownership, many aspects of tenant screening reports perpetuate systemic racism. Communities of color are disproportionately targeted by police and incarcerated, but even just an arrest record without a conviction is often enough for a tenant screening algorithm to bar someone from housing. Additionally, Black renters are much more likely than white renters to have an eviction action filed against them, and the mere filing, regardless of its validity, can disqualify someone from getting housing.

Tenant screening reports also suffer from frequent errors. Reports use algorithms to “scrape” information from state and county records to report purported facts like evictions, arrests, convictions, and debt collection actions. Because these algorithms are designed to “not miss anything,” they can include inaccurate information. There is little to no effort, for example, to make sure that the John Doe evicted in Wyoming is the same John Doe being reported on in Washington. Even more egregious, many algorithms search not only for the person’s name, but for variants of that name. “Anthony Jones” may be searched as “Ant* Jones”, resulting in a person being tagged not only as Anthony Jones, but also Antony Jones, Antonio Jones and Antoinette Jones. The tenant screening companies do little to confirm these “matches,” even when birthdate and residences don’t match. Given that many people share last names, the possibilities for wrongful identification are extreme.
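The wildcard problem described above can be shown with a short sketch. It is not any screening company's actual code: the record layout, the sample records, and both function names are hypothetical, and Python's standard `fnmatch` module stands in for whatever matching a real system uses. It contrasts a loose "Ant* Jones" search with a search that also requires the full name and birthdate to agree.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class CourtRecord:
    name: str
    birthdate: str  # ISO date string
    state: str


# Hypothetical public records, invented for illustration only.
RECORDS = [
    CourtRecord("Anthony Jones", "1985-03-02", "WA"),
    CourtRecord("Antony Jones", "1990-07-15", "WY"),
    CourtRecord("Antonio Jones", "1978-11-30", "WA"),
    CourtRecord("Antoinette Jones", "1985-03-02", "OR"),
    CourtRecord("Andrew Jones", "1985-03-02", "WA"),
]


def wildcard_matches(pattern: str, records: list[CourtRecord]) -> list[CourtRecord]:
    """Loose search: any record whose name fits the wildcard pattern."""
    return [r for r in records if fnmatchcase(r.name, pattern)]


def confirmed_matches(full_name: str, birthdate: str,
                      records: list[CourtRecord]) -> list[CourtRecord]:
    """Stricter search: the full name and the birthdate must both agree."""
    return [r for r in records
            if r.name == full_name and r.birthdate == birthdate]


loose = wildcard_matches("Ant* Jones", RECORDS)
strict = confirmed_matches("Anthony Jones", "1985-03-02", RECORDS)

print([r.name for r in loose])   # four different people share the pattern
print([r.name for r in strict])  # only the applicant's own record remains
```

In this toy data set the wildcard search attaches four people's records to one applicant, while checking the full name and birthdate leaves only one. A real system would need more than this (for example, handling legitimate name changes or typos), but the sketch shows how much an unconfirmed wildcard search can over-match.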

Even when the person can technically review and challenge the underlying information, that right is not meaningful. By the time a correction is made, the apartment is gone, and a different tenant screening company may do the next screening and repeat the same error.

Additionally, the tenant screening company is under no obligation to make sure that an adverse event is valid or justified. A landlord could falsely report to credit agencies that a previous tenant owes money, and regardless of the claim’s validity, this would be reported in the screening report as landlord debt. Similarly, the mere filing of a judicial eviction action, regardless of its justification and regardless of whether it resulted in an eviction, is reported as an eviction action.

The solution is to pass legislation requiring transparency, testing, and accountability: transparency so that people know what information is being used and how it is being weighed, testing to determine and correct bias in the calculation, and accountability so that consumers have meaningful and effective remedies for errors.

Here in Washington, we worked with Senator Hasegawa to introduce a first-of-its-kind algorithmic accountability bill (SB 5116) that would prohibit discrimination via algorithm and require government agencies to make transparent the automated decision systems they use. As we continue to advocate for the passage of a strong algorithmic accountability law, we encourage you to talk with your communities and lawmakers, share your concerns about the impacts of automated decision systems, and support strong legislation. We encourage you to use the Algorithmic Equity Toolkit as a guide in those conversations.

For more information, contact [email protected].